This post is part of a fond farewell to the University of Bristol as I have now finished a very enjoyable 4 years as Enterprise Architect there and I have just moved on to the role of Senior Enterprise Architect at the University down the road – UWE! UWE are building quite a significant EA capability as from this year, recruiting 3 EA’s in one go, led by an Assistant Director of IT dedicated wholly to Strategy and Enterprise Architecture. It was an opportunity I couldn’t turn down, but it was a difficult decision to leave the University of Bristol and I will really miss my fantastic colleagues there. IT Services at Bristol has recently recruited an excellent new CIO, Darrell Sturley, and I think he will help improve all aspects of IT considerably over the next few years. I will watch with interest!

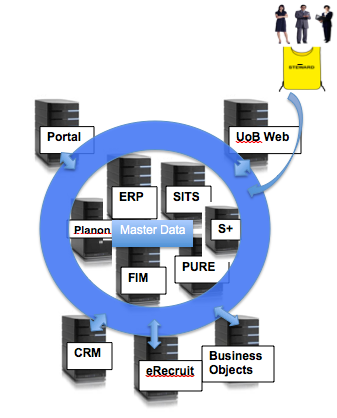

So, back to the real subject matter of this final post on my blog: what did we achieve through Enterprise Architecture in my fourth year in the role of Bristol’s EA? The two key achievements of this year were the successful launch of Master Data Governance at the University and the beginnings of a robust Technical Design Authority capability from within IT Services. I’ve blogged quite a lot about Master Data Governance so I will focus here on the major aspects I put effort into to enable governance of our IT Architecture – through a Technical Design Authority.

Technical Design Authority – how?

To establish a Design Authority capability I focussed less on ‘who’ should be ‘the’ authority figure, saying yea or nay to all proposed IT implementations and standards, and instead on how such a design authority should behave at the University. Or, put another way, I believe the tools and processes are as important as the roles and governance structure put in place for the TDA. I designed a process (including a form for people to complete) through which all IT Change Proposals can be submitted – big or small, and whether originating from within IT Services or from elsewhere (for example through the University’s business case approval process). But I was particularly concerned with the appraisal process being based not on educated guesses, personal views, gut feelings, or even how well presented a proposal might be, but instead on a formal evaluation of the proposal against three things: 1. clearly specified IT Architecture Principles, 2. an understanding of all Architectural Dependencies, and 3. agreed Target Architectures.

For this to work, documentation is needed. Over the year we created the following resources to use in the TDA process.

1. IT Architecture Principles

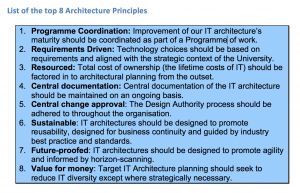

I was given a Technical Architecture team to work with in the last year of my role and this great group of technical experts and I came up with a set of draft principles that we felt were particularly pertinent to the University at this time:

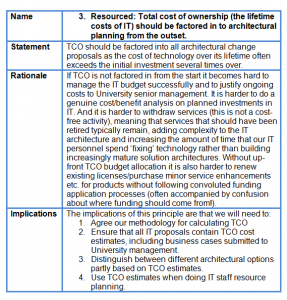

These top 8 drill down into sub principles, but we wanted this small number of summary principles so as not to bamboozle people submitting IT proposals! I should say that they were linked to higher level, overarching principles published by IT Services more generally. I am a big fan of how TOGAF encourages the writing of principles, so we then articulated each principle more precisely. I’ll give one example here (further articulation of the third principle in the list above):

It is interesting to note that the implications can often result in actions that need to be taken! Implication 3. is particularly relevant to the Design Authority decision making process.

2. Architectural Dependencies

It is all too easy for, say, someone in the Server Infrastructure team or the Databases team, to come up with an architectural improvement without appreciating the knock on effect on the applications architecture. Or vice versa. For example, upgrading to the latest release of Oracle or SQL Server would seem to be a good idea, but clearly not if there are business applications which for ever reason aren’t yet compatible with it and which might stop functioning correctly. Similarly, one area of the University might think it a great idea to purchase a new Web Portal to surface content from their system on the Web without realising they are duplicating another Web view of information offered via an existing system – ultimately creating an ill-planned web experience for visitors to our website and potentially confusion all round. I have encountered many many examples of where a lack of an holistic understanding of our IT architecture has allowed for bad decision making.

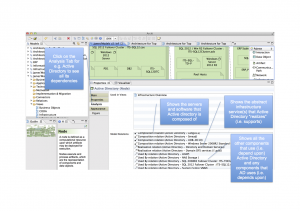

To counter this we drew up Archimate diagrams of our systems architecture. The Technical Architecture team and I received excellent training from Bizzdesign back in January in order to ensure we had several experts within IT Services able to maintain this documentation centrally going forward. We mapped out our entire infrastructure (virtualised and non virtualised server environments, storage, network, backup services, databases and so on). Then we mapped our critical services to it. Initially we used the free tool Archi to analyse our architectural dependencies, here’s an example (click to enlarge):

Then, after explaining to the Senior Management Team how valuable it is to be able to understand which architectural components are interrelated to others using a fuller-featured product, we were given the funding to purchase Bizzdesign Architect licenses. I explained that with good documentation we were also able to understand more about dependencies within our architecture generally – for example here I can show that our student administration system depends on a lot of infrastructure components; we can show very simply via the diagram that if the linux virtualisation platform supporting the web farm should fail, then the web client to the system (SITS:eVision) would fail too. However, the desktop client (SITS:Vision) would be unaffected so certain users would still be able to access the system via this.

From a design authority point of view, it is valuable to use these architecture documents to ask pertinent questions about the potential for undesirable knock on effects that might occur if a particular IT change is approved.

3. Target Architectures

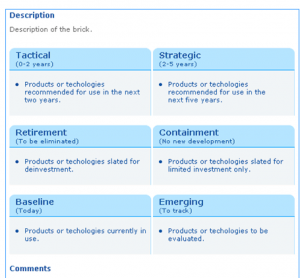

We could draw out target architectures using Archimate – these are useful. But I wanted something more accessible for those not familar with that language. I follow work by ITANA and decided to go with the Architecture Bricks approach they were evaluating at the time.

I developed a template that the NIH in the US were using at the time:

and the Technical Architecture team and I produced bricks for 20 major architectural areas we support. Here’s an example:

I ran a session with the IT Senior Management Team where we reviewed the 20 bricks together. It revealed key issues such as the number of retirement targets we had not planned for so far, and the number of – thus far uncoordinated – plans to move to Cloud services.

From a Design Authority point of view, once they have been evaluated holistically and approved centrally, the Bricks become ‘the bible’. They describe future plans and containment/retirement targets. Therefore, for example, if a proposal is offered that attempts to build a new service on something we’ve marked as a retirement target we can have a fully informed conversation about whether to recommend against that.

In this respect, the Design Authority isn’t functioning to try and stop all IT plans that run counter to the existing plans. Instead it is about making a clear and informed response about the implications to the organisation of deviating from the well thought out IT architecture plan.

So that’s my top 3 contributions to the establishment of a Design Authority capability at the University. There are several other governance aspects of course, but as I said earlier, I felt it was key to reduce ambiguous decision-making by having a clear decision framework within which to operate first and foremost.

Thank you for taking the time to read my blog and to all the colleagues from Bristol and around the sector that I’ve had the pleasure to work with in my role… I hope to work with many of you still as I stay in the HE sector in my new role! Bye for now!